By Jordan Francis, Beth Do, and Stacey Gray, with thanks to Dr. Rob van Eijk and Dr. Gabriela Zanfir-Fortuna for their contributions.

California Governor Gavin Newsom signed Assembly Bill (AB) 1008 into law on September 28, amending the definition of “personal information” under the California Consumer Privacy Act (CCPA) to provide that personal information can exist in “abstract digital formats,” including in “artificial intelligence systems that are capable of outputting personal information.”

The bill focuses on the issue of applying existing CCPA privacy rights and obligations to generative AI and large language models (LLMs). However, the bill introduces ambiguities that raise a significant emerging question within the privacy and AI regulatory landscape: whether, and to what extent, personal information exists within generative AI models. The legal interpretation of this question (whether “yes,” “no,” or “sometimes”) will impact how concrete privacy protections, such as deletion and access requests, apply to the complex data processes in generative AI systems.

Prior to its signing, the Future of Privacy Forum (FPF) submitted a letter to Governor Newsom’s office, highlighting the ambiguities in the bill, summarizing some preliminary analysis from European data protection regulators concerning whether LLMs “contain” personal information, and recommending that California regulators collaborate with technologists and their U.S. and international counterparts to share expertise and work toward a common understanding of this evolving issue.

This blog explores:

- The complex policy, legal, and technical challenges posed by the enactment of AB 1008 regarding whether generative AI models contain personal information;

- Evolving perspectives from global regulators and experts on this issue; and

- The implications of various approaches for privacy compliance and AI development.

AB 1008 Highlights a Complex Question: Do Generative AI Models “Contain” Personal Information?

AB 1008 amends the definition of “personal information” under the CCPA to clarify that personal information can exist in various formats, including physical formats (e.g., “paper documents, printed images, vinyl records, or video tapes”), digital formats (e.g., “text, image, audio, or video files”), and abstract digital formats (e.g., “compressed or encrypted files, metadata, or artificial intelligence systems that are capable of outputting personal information”). Specifically, the inclusion of “abstract digital formats” and “artificial intelligence systems” raises the complex question of whether generative AI models themselves can “contain” personal information.

Generative AI models can process personal information at many stages of their life cycle. Personal information may be present in the data collected for training datasets, often sourced from publicly available information that is exempt from U.S. privacy laws, as well as in the training processes. Personal information can also be present in the input and output of a generative AI model when it is being used or trained. For example, asking an LLM such as ChatGPT, Claude, Gemini, or Llama a question such as “Who is Tom Cruise?” or “When is Tom Cruise’s birthday?” should generate a response that contains personal information, and the same is true for many lesser-known public figures.

Does this mean personal information exists “within” the model itself? Unlike typical databases that store and retrieve information, LLMs are deep neural networks trained on vast amounts of text data to predict the next word in a sequence. LLMs rely on the statistical relationships between “tokens,” or “chunks” of text representing commonly occurring sequences of characters. In such a model, the tokens comprising the words “Tom” and “Cruise” are more closely related to each other than the tokens comprising “Tom” and “elevator” (or another random word). LLMs use a transformer architecture, which enables parallel processing of input text and captures long-range dependencies, allowing modern LLMs to engage in longer, more human-like conversations and to exhibit greater levels of “understanding.”
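To make the idea of “statistical relationships” between tokens more concrete, the sketch below estimates next-word probabilities from a tiny, hypothetical corpus by counting which word follows which. It is a deliberately simplified bigram model, not a transformer: whole words stand in for tokens, and the corpus and function names are illustrative assumptions. The point it shows is that the model’s “knowledge” that “Cruise” tends to follow “Tom” is held as a frequency, not as a stored record about a person.

```python
from collections import Counter

# Hypothetical toy corpus; real LLMs are trained on vastly more text
# and use subword tokens rather than whole words.
corpus = [
    "tom cruise starred in top gun",
    "tom cruise is an actor",
    "tom took the elevator",
    "tom cruise attended the premiere",
]

def bigram_counts(sentences):
    """Count how often each word is immediately followed by another word."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        counts.update(zip(words, words[1:]))
    return counts

def next_word_probability(counts, prev, nxt):
    """Estimate P(nxt | prev) as the share of bigrams starting with prev that end in nxt."""
    total = sum(c for (p, _), c in counts.items() if p == prev)
    return counts[(prev, nxt)] / total if total else 0.0

counts = bigram_counts(corpus)
print(next_word_probability(counts, "tom", "cruise"))    # 0.75
print(next_word_probability(counts, "tom", "elevator"))  # 0.0
```

A transformer learns far richer, context-dependent relationships than these pairwise counts, but the same underlying question applies: whether patterns like these, once learned, amount to “containing” personal information.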

While the question may seem academic, the answer has material compliance implications for organizations, including for responding to deletion and access requests (discussed further below). An earlier draft version of AB 1008 would have provided that personal information can exist in “the model weights of artificial neural networks,” and legislative history supports that AB 1008’s original intent was to address concerns that, “[o]nce trained, these [GenAI] systems could accurately reproduce their training data, including Californians’ personal information.”

Despite the stated goal of clarifying the law’s definition of personal information, ambiguities remain. The statute refers to AI “systems” rather than “models,” which could affect the meaning of the law, and “systems” is left undefined. While a “model” generally refers to a specific trained algorithm (e.g., an LLM), an AI “system” could also encompass the broader architecture around the model, including user interfaces and application programming interfaces (APIs) for interacting with the model, tools for monitoring model performance and usage, and processes for periodically fine-tuning and retraining the model. Additionally, legislative analysis suggests the drafters were primarily concerned with personal information in a system’s output. The Assembly Floor analysis from August 31 suggests that organizations could comply with deletion requests by preventing their systems from outputting personal information through methods like:

1. Filtering and suppressing the system’s inputs and outputs.

2. Excluding the consumer’s personal information from the system’s training data.

3. Fine-tuning the system’s model in order to prevent the system from outputting personal information.

4. Directly manipulating model parameters in order to prevent the system from outputting personal information.
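The first method in the list above, filtering inputs and outputs, can be sketched as a simple suppression layer that sits outside the model. The example below is a minimal illustration under stated assumptions: the suppression list, the placeholder name “Jane Doe,” and the functions `filter_output` and `should_block_prompt` are all hypothetical, and real systems likely use more sophisticated detection than exact name matching.

```python
import re

# Hypothetical suppression list built from deletion requests. The filter can
# only work because the names themselves are retained here.
SUPPRESSED_NAMES = ["Jane Doe"]

def build_pattern(names):
    """Compile a case-insensitive pattern matching any suppressed name as a whole phrase."""
    escaped = [re.escape(name) for name in names]
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)

PATTERN = build_pattern(SUPPRESSED_NAMES)

def filter_output(text, replacement="[redacted]"):
    """Output side: replace suppressed names in model output before it reaches the user."""
    return PATTERN.sub(replacement, text)

def should_block_prompt(prompt):
    """Input side: flag prompts that mention a suppressed individual."""
    return PATTERN.search(prompt) is not None

print(filter_output("Jane Doe lives in Sacramento."))       # [redacted] lives in Sacramento.
print(should_block_prompt("When is jane doe's birthday?"))  # True
```

Note that nothing in this sketch changes the model itself; the filter operates only on text going in and out, and it misses paraphrases, nicknames, or misspellings that do not match the stored names.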

This emphasis on the output of generative AI models, rather than the models themselves, suggests that the bill does not necessarily define models as containing personal information per se. Addressing these policy ambiguities will benefit from guidance and alignment between companies, researchers, policy experts, and regulators. Ultimately, it will be up to the Attorney General and the California Privacy Protection Agency (CPPA) to make this determination through advisories, rulemaking, and/or enforcement actions. Though the CPPA issued a July 2024 letter on AB 1008, the letter does not provide detailed conclusions or analysis on how the CCPA applies to the model itself, leaving room for additional clarification and guidance.

Why Does It Matter? Practical Implications for Development and Compliance

The extent to which AI models, either independently or as part of AI systems, contain personal information could have significant implications for organizations’ obligations under privacy laws. If personal information exists primarily in the training, input, and output of a generative AI system, but not “within” the model, organizations can implement protective measures to comply with privacy laws like the CCPA through mechanisms like suppression and de-identification. For example, a deletion request could be applied to training datasets (to the extent that the information remains identifiable), or applied to prevent the information from being generated in the model’s output through suppression filters. Some leading LLM providers already offer this option; for example, ChatGPT offers a feature allowing individuals to request to “Remove your personal data from ChatGPT model outputs.”
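Applying a deletion request to a training dataset, as described above, can be illustrated with a short sketch. The record structure, the sample data, and the function `apply_deletion_request` are hypothetical, and case-insensitive substring matching is a deliberately simple stand-in for the identity resolution a real pipeline would need.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainingRecord:
    """One hypothetical training example."""
    record_id: int
    text: str

def apply_deletion_request(records, identifiers):
    """Split records into (kept, removed) based on whether they mention any identifier."""
    needles = [identifier.lower() for identifier in identifiers]
    kept, removed = [], []
    for record in records:
        lowered = record.text.lower()
        if any(needle in lowered for needle in needles):
            removed.append(record)
        else:
            kept.append(record)
    return kept, removed

# Hypothetical dataset; "Jane Doe" is a placeholder identity.
dataset = [
    TrainingRecord(1, "Jane Doe posted a review of the new cafe."),
    TrainingRecord(2, "The cafe opens at 8 a.m. on weekdays."),
    TrainingRecord(3, "Contact jane.doe@example.com for details."),
]

kept, removed = apply_deletion_request(dataset, ["Jane Doe", "jane.doe@example.com"])
print([r.record_id for r in kept])     # [2]
print([r.record_id for r in removed])  # [1, 3]
```

Matching like this only works while the data remains identifiable, and it can both over-remove and under-remove records. Removing records from a dataset also affects only future training runs; a model that was already trained on those records is unchanged, which is why the question of what exists “within” the model matters.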

However, if there were a legal interpretation that personal information can exist within a model, then suppression of a model’s output would not necessarily be sufficient to comply with a deletion request. While a suppression mechanism may prevent information from being generated in the output stage, such an approach requires that a company retain personal information as a screening mechanism in order to effectuate the suppression on an ongoing basis. An alternative option could be for information to be “un-learned” or “forgotten,” but this remains a challenging feat given the complexity of an AI model that does not rely on traditional storage and retrieval, and the fact that models may continue to be refined over time. While researchers have begun to explore such “machine unlearning” techniques, the field remains at an early stage. Furthermore, there is growing research interest in architectures that separate knowledge representation from the core language model.

Other compliance operations could also look different: for example, if a model contains personal information, then purchasing or licensing a model would carry the same obligations that typically accompany purchasing or licensing large databases of personal information.

Emerging Perspectives from European Regulators and Other Experts

While regulators in the United States have mostly not yet begun to address this legal issue directly, some early views are beginning to emerge from European regulators and other experts.

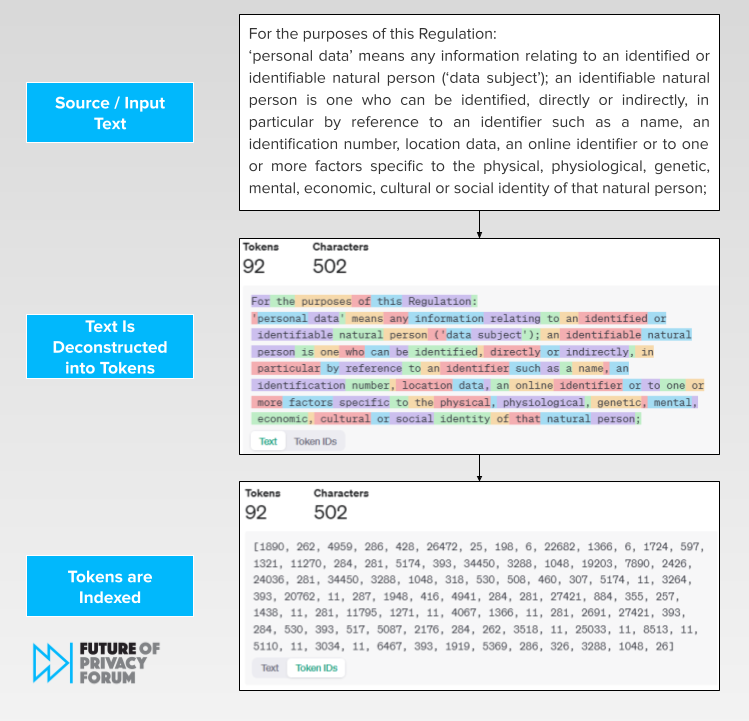

Preliminary Perspectives that Large Language Models Do Not Contain Personal Information: In July 2024, the Hamburg Data Protection Authority (Hamburg DPA) in Germany released an informal discussion paper arguing that LLMs do not store personal information under the GDPR because these models do not contain any data that relates to an identified or identifiable person. According to the Hamburg DPA, the tokenization and embedding processes involved in developing LLMs transform text into “abstract mathematical representations,” losing “concrete characteristics and references to specific individuals,” and instead reflect “general patterns and correlations derived from the training data.”


Fig. 1: Example indexed values of tokens created using OpenAI’s tokenizer tool (setting: GPT-3 (Legacy)).
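The tokenization and embedding steps the Hamburg DPA describes, and that Fig. 1 illustrates with real token IDs, can be approximated with a toy pipeline: text is split into units, each unit is mapped to an integer ID, and each ID is mapped to a vector of numbers. This sketch is an assumption-laden simplification: it splits on whitespace rather than using OpenAI’s tokenizer or any subword scheme, its IDs do not correspond to those in Fig. 1, and its embedding values are random rather than learned.

```python
import random

def build_vocab(texts):
    """Assign an integer ID to each distinct lower-cased word, in order of first appearance."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

def encode(text, vocab):
    """Map text to a list of token IDs; words missing from the vocabulary are skipped."""
    return [vocab[w] for w in text.lower().split() if w in vocab]

def make_embeddings(vocab_size, dim=4, seed=0):
    """Build one vector of `dim` floats per token ID.

    In a trained model these values would be learned; here they are random.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]

vocab = build_vocab(["Who is Tom Cruise", "When is Tom Cruise's birthday"])
ids = encode("Who is Tom Cruise", vocab)
embeddings = make_embeddings(len(vocab))
vectors = [embeddings[i] for i in ids]
print(ids)  # [0, 1, 2, 3]
```

After these steps, the name is represented only as integers and vectors. Whether such “abstract mathematical representations” still relate to an identifiable person is precisely the point on which the perspectives below diverge.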

The Hamburg DPA added that LLMs “do not store the texts used for training in their original form, but process them in such a way that the training data set can never be fully reconstructed from the model.”

Similarly, a 2023 guidance document from the Danish DPA (Datatilsynet) on AI use by public authorities also assumed that an AI model “does not in itself constitute personal data, but is only the result of the processing of personal data.” The document notes that some models can be attacked in ways that re-identify individuals whose information existed in the training data. While this would be considered a data breach, this risk “does not, in the opinion of the Danish Data Protection Agency, mean that the model should be considered personal data in itself.”

The Irish Data Protection Commission’s (DPC) 2024 guidance on generative AI acknowledged that some models may unintentionally regurgitate passages of personal training data, a key point raised by the sponsor of the California law. However, comprehensive reviews are underway, with Ireland’s DPC seeking an opinion from the European Data Protection Board (EDPB) on “issues arising from the use of personal data in AI models.”

Recently, the EDPB’s ChatGPT Taskforce Report noted that the processing of personal information occurs at different stages of an LLM’s life cycle (including collection, pre-processing, training, prompts, output, and further training). While it did not address personal information within the model itself, the report emphasized that “technical impossibility cannot be invoked to justify non-compliance with GDPR requirements.”

Opposing Perspectives that LLMs Do Contain Personal Information: In contrast, in a detailed analysis of this issue, technology lawyer David Rosenthal has argued that, according to the “relative approach” espoused by the Court of Justice of the European Union (CJEU Case C‑582/14), the question of whether an LLM contains personal information should be assessed solely from the perspectives of the LLM user and the parties who have access to the output. Whether a data controller can identify a data subject based on personal information derived from an LLM is not material; the information is considered personal information as long as the data subject can be identified, or is “reasonably likely” to be identified, by the party with access, and that party has an interest in identifying the data subject. Consequently, a party that discloses information that constitutes personal information for another party is considered to be disclosing personal information to a third party and must comply with the GDPR.

Conversely, if an LLM user formulates a prompt that cannot reasonably be expected to generate output relating to a specific data subject, or if those with access to the output do not reasonably have the means to identify those data subjects and lack an interest in doing so, then there is no personal information and thus data protection requirements do not apply. Other commentators who disagree with the Hamburg DPA’s discussion paper have focused on the reproducibility of training data, likening the data stored in an LLM to encrypted data.

What’s Next

Addressing these policy ambiguities will benefit from guidance and alignment between companies, researchers, policy experts, and regulators. Ultimately, it will be up to the California Attorney General and CPPA to make a determination under AB 1008 through advisories, rulemaking, or enforcement actions. Greater collaboration between regulators and technical experts may also help build a shared understanding of personal information in LLMs, non-LLM AI models, and AI systems and promote consistency in data protection regulation.

Even if California’s policy and legal conclusions ultimately differ from conclusions in other jurisdictions, building a shared (technical) understanding will help assess whether legislation like AB 1008 effectively addresses these issues and comports with existing privacy and data protection legal requirements, such as data minimization and consumer rights.